In this blog post, we will share a few tips on how you can improve your website’s SEO by optimizing the robots.txt file.

The robots.txt file plays an important role in SEO because it tells search engines how your website can be crawled. This is why the robots.txt file is considered one of the most powerful SEO tools.

Later on, you will also learn how to create and optimize a WordPress robots.txt file for SEO.

What is a robots.txt file?

Robots.txt is a text file that website owners create to tell search engine bots how to crawl and index the pages on their website.

The file is stored in the root directory, which is also known as your website’s main folder. The basic format of a robots.txt file is shown below.

    User-agent: [user-agent name]
    Disallow: [URL string not to be crawled]

    User-agent: [user-agent name]
    Allow: [URL string to be crawled]

    Sitemap: [URL of your XML Sitemap]

You can have multiple lines of instructions to allow or disallow particular URLs, and you can add multiple sitemaps too. If you do not disallow a URL, search engine bots will assume that they are allowed to crawl it.

An example robots.txt file is given below.

    User-Agent: *
    Allow: /wp-content/uploads/
    Disallow: /wp-content/plugins/
    Disallow: /wp-admin/

    Sitemap: https://example.com/sitemap_index.xml

In the example above, we have allowed search engines to crawl and index the files in the WordPress uploads folder.

After that, we have disallowed search bots from crawling and indexing the WordPress plugins and admin folders.

Finally, we have provided the URL of the XML sitemap.
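If you want to sanity-check rules like these before publishing them, one option is Python’s standard `urllib.robotparser` module. The sketch below parses the example above and asks whether a generic bot may fetch a few URLs. The three URLs are made up for illustration, and Python’s parser applies the first matching rule, which is not necessarily how every search engine resolves conflicting rules, so treat the result as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt from this post.
ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs, used only to exercise the three rules.
checks = [
    "https://example.com/wp-content/uploads/photo.jpg",
    "https://example.com/wp-content/plugins/some-plugin/file.php",
    "https://example.com/wp-admin/",
]
results = {url: parser.can_fetch("*", url) for url in checks}
for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)

# site_maps() is available in Python 3.8 and later.
sitemaps = parser.site_maps()
print(sitemaps)
```

Here the uploads URL should be reported as allowed and the plugins and admin URLs as blocked.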

Do you need a robots.txt file for your WordPress website?

Search engines will still crawl and index your pages even if you don’t have a robots.txt file. However, without one you can’t tell search engines which particular folders or pages they should not crawl.

This will not have much impact if you have just created a website or a blog with little content.

However, once your website grows and has a lot of content, you will want more control over how its pages are crawled and indexed.

Here is the first reason.

Search bots have a crawl quota for every website.

This means that they crawl a certain number of pages during a crawl session. If they do not finish crawling your website, they will come back and resume crawling in another session.

This can slow down your website’s indexing rate.

You can fix this by disallowing search bots from crawling pages that are not necessary, such as plugin files, WordPress admin pages, and theme folders.

Disallowing unnecessary pages saves your crawl quota. This helps search engines crawl more of your website’s important pages and index them as quickly as possible.

Another good reason to use and optimize the WordPress robots.txt file is given below.

You can use this file whenever you need to stop search engines from indexing a page or post on your site.

It is not the safest way to hide your content from the general public, but it will help you keep that content from appearing in search results.

What does a robots.txt file look like?

Most popular blogs use a very simple robots.txt file. Its contents can vary depending on the needs of the particular website.

    User-agent: *
    Disallow:

    Sitemap: http://www.example.com/post-sitemap.xml
    Sitemap: http://www.example.com/page-sitemap.xml

This robots.txt file allows all bots to index all content and provides links to the website’s XML sitemaps.

The following rules are worth following in a WordPress robots.txt file:

    User-Agent: *
    Allow: /wp-content/uploads/
    Disallow: /wp-content/plugins/
    Disallow: /wp-admin/
    Disallow: /readme.html
    Disallow: /refer/

    Sitemap: http://www.example.com/post-sitemap.xml
    Sitemap: http://www.example.com/page-sitemap.xml

This tells bots to index all WordPress images and files. It disallows search bots from indexing the WordPress admin area, WordPress plugin files, the WordPress readme file, and affiliate links.

Adding sitemaps to the robots.txt file helps Google bots find all the pages on your website.

Now that you know what a robots.txt file looks like, let’s learn how to create one in WordPress.

How to create a robots.txt file in WordPress?

There are several ways to create a robots.txt file in WordPress. The top methods are listed below, and you can choose the one that works best for you.

Method 1: Modifying the robots.txt file using All in One SEO

All in One SEO is one of the most well-known WordPress SEO plugins on the market and is used by more than 1 million websites.

The All in One SEO plugin is very simple to use and offers a robots.txt file generator, which also helps you optimize the WordPress robots.txt file.

If you have not installed this SEO plugin yet, install and activate it first from the WordPress dashboard. A free version is also available, so beginners can use its features without spending money.

After activating the plugin, you can use it to create or modify the robots.txt file directly from your WordPress admin area.

To use it:

- Go to All in One SEO

- To edit the robots.txt file, click on Tools

- Click on ‘Enable Custom Robots.txt’ to turn on the editing option

- With this toggle turned on, you can create a custom robots.txt file in WordPress.

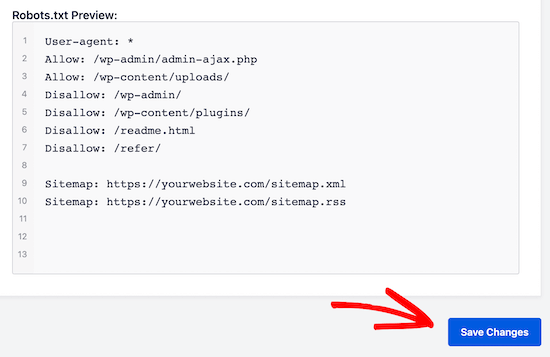

- The All in One SEO plugin will now show your existing robots.txt file in the ‘Robots.txt Preview’ section at the bottom of the screen.

This version will show the default rules that were added by WordPress.

These default rules tell search engines not to crawl your core WordPress files, allow bots to index all content, and provide a link to your website’s XML sitemaps.

You can now add your own custom rules to improve your robots.txt for SEO.

To add a rule, enter a user agent in the ‘User Agent’ field. Using a * will apply the rule to all user agents.

Next, choose whether you want to allow or disallow search engines from crawling.

Then enter the filename or directory path in the ‘Directory Path’ field.

The rule will be applied to your robots.txt automatically. To add another rule, click on the ‘Add Rule’ button.

We suggest that you keep adding rules until you have created the ideal robots.txt format.

The custom rules added by you will look like

Don’t forget to click on ‘Save Changes’ to store your changes.
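The three fields described above (user agent, allow or disallow, and directory path) map directly onto lines in the robots.txt file. The Python sketch below shows that mapping. It is only an illustration and not how the All in One SEO plugin works internally; the names `Rule` and `render_robots` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    user_agent: str  # "*" applies the rule to all user agents
    allow: bool      # True -> Allow, False -> Disallow
    path: str        # directory path or filename


def render_robots(rules, sitemaps=()):
    """Render rules, grouped by user agent in first-seen order, as robots.txt text."""
    groups = {}
    for rule in rules:
        groups.setdefault(rule.user_agent, []).append(rule)

    lines = []
    for agent, agent_rules in groups.items():
        lines.append(f"User-agent: {agent}")
        for rule in agent_rules:
            directive = "Allow" if rule.allow else "Disallow"
            lines.append(f"{directive}: {rule.path}")
        lines.append("")  # a blank line separates groups
    for url in sitemaps:
        lines.append(f"Sitemap: {url}")
    return "\n".join(lines).rstrip("\n") + "\n"


text = render_robots(
    [
        Rule("*", True, "/wp-content/uploads/"),
        Rule("*", False, "/wp-content/plugins/"),
        Rule("*", False, "/wp-admin/"),
    ],
    sitemaps=["https://example.com/sitemap_index.xml"],
)
print(text)
```

Each `Rule` corresponds to one row you would add with the ‘Add Rule’ button, and the printed text has the same shape as the example file shown earlier in this post.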

Method 2: Modifying the robots.txt file manually using FTP

Another way to optimize the WordPress robots.txt file is to edit it with the help of an FTP client.

Simply connect to your WordPress hosting account using an FTP client.

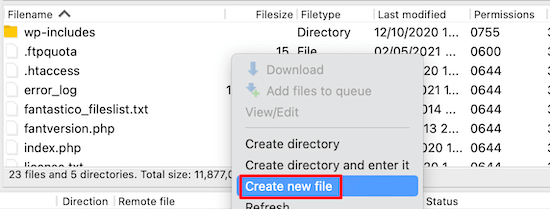

Once you are logged in, you will be able to see the robots.txt file in your website’s root folder.

If you can’t find it, that means you do not have a robots.txt file.

In that case, you need to create the robots.txt file.

As mentioned earlier, robots.txt is a plain text file, so you can download it to your computer and edit it with any plain text editor such as TextEdit, Notepad, or WordPad.

Once you have made all your changes, save the file and upload it back to the root folder of your website.
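If you prefer a script over a graphical FTP client, the upload step can be sketched with Python’s standard `ftplib` module. This is a minimal sketch that assumes your host supports FTP over TLS. The function names, the host, the credentials, and the `public_html` root folder in the commented example are all placeholders, so check your hosting account for the real values.

```python
import io
from ftplib import FTP_TLS


def upload_robots(ftp, robots_text, remote_name="robots.txt"):
    """Upload robots_text into the FTP session's current directory."""
    payload = io.BytesIO(robots_text.encode("utf-8"))
    # storbinary sends the file-like object using the FTP STOR command.
    ftp.storbinary(f"STOR {remote_name}", payload)


def connect_and_upload(host, user, password, root_dir, robots_text):
    """Connect over FTP with TLS, change to the site root, and upload."""
    with FTP_TLS(host) as ftp:
        ftp.login(user, password)
        ftp.prot_p()       # encrypt the data connection as well
        ftp.cwd(root_dir)  # the root folder name depends on your host
        upload_robots(ftp, robots_text)


# Example call with placeholder values (not real credentials):
# connect_and_upload("ftp.example.com", "user", "secret", "public_html",
#                    "User-agent: *\nDisallow: /wp-admin/\n")
```

The upload is kept in its own function so that it can be tried against any object with a `storbinary` method before it touches a live site.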

How can you test your robots.txt file?

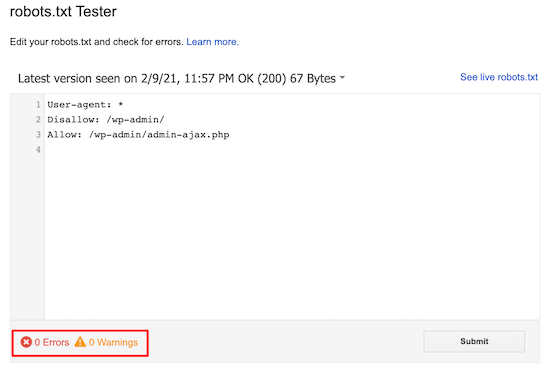

You can test it with the help of a robots.txt tester tool.

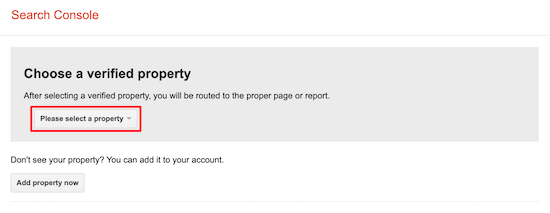

You will find many robots.txt tester tools on the web. One of the best is in Google Search Console.

To use this tool, your website first needs to be connected to Google Search Console. After that, you can start using its features.

Simply select your property from the drop-down list.

The tool will fetch your website’s robots.txt file automatically and highlight any warnings and errors. It is one of the best tools to help you optimize your WordPress robots.txt file easily.
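Before uploading the file, you can also catch simple mistakes locally. The Python sketch below is a very small checker that flags a few common problems, such as a misspelled directive. It is not a replacement for a real tester tool and does not reproduce what Google Search Console reports; the function name `lint_robots` and the list of directives it treats as known are assumptions made for this example.

```python
# Directives this sketch treats as known (an assumption, not an official list).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text):
    """Return a list of (line_number, message) warnings for robots.txt text."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append((number, "missing ':' separator"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name not in KNOWN_DIRECTIVES:
            warnings.append((number, f"unknown directive '{name}'"))
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"}:
            if not seen_user_agent:
                warnings.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                warnings.append((number, "path should start with '/'"))
    return warnings


# A deliberately broken sample file to show the warnings.
sample = """\
Disallow: /wp-admin/
User-agent: *
Disalow: /wp-content/plugins/
Allow: wp-content/uploads/
Sitemap: https://example.com/sitemap_index.xml
"""
for number, message in lint_robots(sample):
    print(f"line {number}: {message}")
```

For the broken sample, the checker reports a rule placed before any User-agent line, the misspelled `Disalow`, and a path without a leading slash.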

Conclusion:

The main goal of optimizing the robots.txt file is to prevent search engines from crawling pages that do not need to be crawled, for example pages in the WordPress admin folder or the WordPress plugins folder.

A common myth is that blocking WordPress categories, tags, and archive pages will improve the crawl rate and result in faster indexing and higher rankings.

This is not true, and it also goes against Google’s webmaster guidelines.

We highly recommend that you read and consider all the points mentioned above.

We hope this blog post helps you create a robots.txt file in the right format for your website and optimize your WordPress robots.txt.